Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

Is there a way to get a list of all pages of your website that are indexed in Google?

-

I am trying to put together a comprehensive list of all pages that are indexed in Google, and I have heard differing opinions on how to do this.

-

And if people don't have Search Console access, they can always try queries like:

https://www.google.com/search?q=site%3Abbc.co.uk

... however, because the listed URLs are difficult to export in full, and because of some anti-scraping measures by Google, this data tends to be inferior to that supplied by Search Console. Always use Google's official tools first.
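For anyone who wants to build that kind of query URL for their own domain, here is a minimal sketch in Python. The function name `site_query_url` is just an illustration, not part of any tool; it only constructs the link to open in a browser and does not fetch or scrape results.

```python
from urllib.parse import urlencode


def site_query_url(domain: str) -> str:
    """Return a Google search URL for a site: query restricted to `domain`."""
    # urlencode percent-encodes the colon, giving q=site%3A<domain>
    return "https://www.google.com/search?" + urlencode({"q": f"site:{domain}"})


print(site_query_url("bbc.co.uk"))
# prints https://www.google.com/search?q=site%3Abbc.co.uk
```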

-

There are a number of different ways, but I'd always start with Google Search Console Coverage reports.

If you haven't already, sign up and configure Search Console.

Then, go to your property > Coverage.

Then select the "Valid" tab. You'll then be able to click on the two types of valid pages ("Submitted and indexed" and "Indexed, not submitted in sitemap"). Within each of these categories, you'll be able to download a CSV of the page list.

In the same area you'll be able to see lists of pages that Google knows about but hasn't indexed for one reason or another.
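Once you have downloaded the CSV for each of the two valid categories, you may want a single de-duplicated list. Below is a rough sketch of one way to merge them in Python. The column name `URL` and the sample rows are assumptions for illustration only; check the header row of your own export and adjust `url_column` to match.

```python
import csv
import io


def merge_indexed_urls(csv_texts, url_column="URL"):
    """Combine several CSV exports into one sorted, de-duplicated URL list.

    `url_column` is an assumed header name; change it to match your export.
    """
    urls = set()
    for text in csv_texts:
        for row in csv.DictReader(io.StringIO(text)):
            url = (row.get(url_column) or "").strip()
            if url:
                urls.add(url)
    return sorted(urls)


# Hypothetical sample data standing in for the two downloaded exports
submitted = (
    "URL,Last crawled\n"
    "https://example.com/,2021-01-05\n"
    "https://example.com/about,2021-01-04\n"
)
not_in_sitemap = (
    "URL,Last crawled\n"
    "https://example.com/about,2021-01-04\n"
    "https://example.com/old-page,2020-12-30\n"
)

for url in merge_indexed_urls([submitted, not_in_sitemap]):
    print(url)
```

With real files, you would read each export's contents (for example with `open(path, newline="", encoding="utf-8-sig").read()`) and pass the resulting strings in the list instead of the sample data.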


Related Questions

-

Why Google de-rank a website.

Hi, I was inspecting a website which is covering the topic of best wheelbarrow of 2021, it is a new website and and starts ranking on google. But, after few days it got de-rank automatically and Moz is also not showing any result to that. I was wandering why this just happened and what should I do if I made my website and will not face this kind of situation?

Technical SEO | | Moeen22330 -

My video sitemap is not being index by Google

Dear friends, I have a videos portal. I created a video sitemap.xml and submit in to GWT but after 20 days it has not been indexed. I have verified in bing webmaster as well. All videos are dynamically being fetched from server. My all static pages have been indexed but not videos. Please help me where am I doing the mistake. There are no separate pages for single videos. All the content is dynamically coming from server. Please help me. your answers will be more appreciated................. Thanks

Technical SEO | | docbeans0 -

Removed Product page on our website, what to do

We just removed an entire product category on our website, (product pages still exist, but will be removed soon as well) Should we be setting up re-directs, or can we simply delete this category and product

Technical SEO | | DutchG

pages and do nothing? We just received this in Google Webmasters tools: Google detected a significant increase in the number of URLs that return a 404 (Page Not Found) error. We have not updated the sitemap yet...Would this be enough to do or should we do more? You can view our website here: http://tinyurl.com/6la8 We removed the entire "Spring Planted Category"0 -

Image Indexing Issue by Google

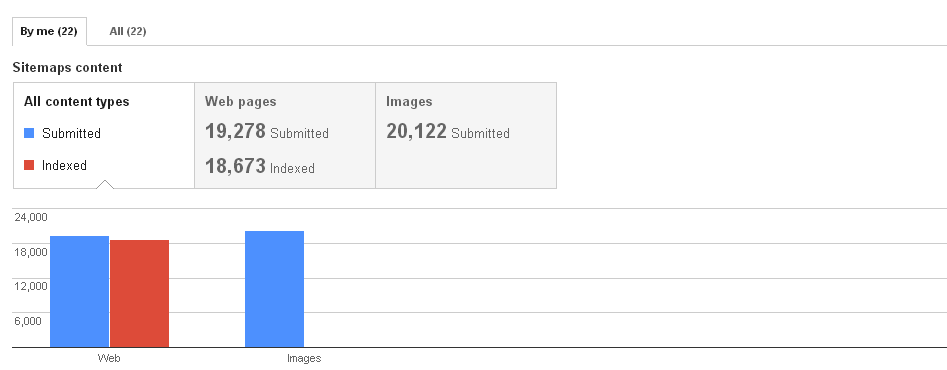

Hello All,My URL is: www.thesalebox.comI have Submitted my image Sitemap in google webmaster tool on 10th Oct 2013,Still google could not indexing any of my web images,Please refer my sitemap - www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xmland my webmaster status and image indexing status are below,

Technical SEO | | CommercePundit Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0

Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0 -

Best way to handle pages with iframes that I don't want indexed? Noindex in the header?

I am doing a bit of SEO work for a friend, and the situation is the following: The site is a place to discuss articles on the web. When clicking on a link that has been posted, it sends the user to a URL on the main site that is URL.com/article/view. This page has a large iframe that contains the article itself, and a small bar at the top containing the article with various links to get back to the original site. I'd like to make sure that the comment pages (URL.com/article) are indexed instead of all of the URL.com/article/view pages, which won't really do much for SEO. However, all of these pages are indexed. What would be the best approach to make sure the iframe pages aren't indexed? My intuition is to just have a "noindex" in the header of those pages, and just make sure that the conversation pages themselves are properly linked throughout the site, so that they get indexed properly. Does this seem right? Thanks for the help...

Technical SEO | | jim_shook0 -

Pages removed from Google index?

Hi All, I had around 2,300 pages in the google index until a week ago. The index removed a load and left me with 152 submitted, 152 indexed? I have just re-submitted my sitemap and will wait to see what happens. Any idea why it has done this? I have seen a drop in my rankings since. Thanks

Technical SEO | | TomLondon0 -

De-indexing millions of pages - would this work?

Hi all, We run an e-commerce site with a catalogue of around 5 million products. Unfortunately, we have let Googlebot crawl and index tens of millions of search URLs, the majority of which are very thin of content or duplicates of other URLs. In short: we are in deep. Our bloated Google-index is hampering our real content to rank; Googlebot does not bother crawling our real content (product pages specifically) and hammers the life out of our servers. Since having Googlebot crawl and de-index tens of millions of old URLs would probably take years (?), my plan is this: 301 redirect all old SERP URLs to a new SERP URL. If new URL should not be indexed, add meta robots noindex tag on new URL. When it is evident that Google has indexed most "high quality" new URLs, robots.txt disallow crawling of old SERP URLs. Then directory style remove all old SERP URLs in GWT URL Removal Tool This would be an example of an old URL:

Technical SEO | | TalkInThePark

www.site.com/cgi-bin/weirdapplicationname.cgi?word=bmw&what=1.2&how=2 This would be an example of a new URL:

www.site.com/search?q=bmw&category=cars&color=blue I have to specific questions: Would Google both de-index the old URL and not index the new URL after 301 redirecting the old URL to the new URL (which is noindexed) as described in point 2 above? What risks are associated with removing tens of millions of URLs directory style in GWT URL Removal Tool? I have done this before but then I removed "only" some useless 50 000 "add to cart"-URLs.Google says themselves that you should not remove duplicate/thin content this way and that using this tool tools this way "may cause problems for your site". And yes, these tens of millions of SERP URLs is a result of a faceted navigation/search function let loose all to long.

And no, we cannot wait for Googlebot to crawl all these millions of URLs in order to discover the 301. By then we would be out of business. Best regards,

TalkInThePark0 -

Dynamically-generated .PDF files, instead of normal pages, indexed by and ranking in Google

Hi, I come across a tough problem. I am working on an online-store website which contains the functionlaity of viewing products details in .PDF format (by the way, the website is built on Joomla CMS), now when I search my site's name in Google, the SERP simply displays my .PDF files in the first couple positions (shown in normal .PDF files format: [PDF]...)and I cannot find the normal pages there on SERP #1 unless I search the full site domain in Google. I really don't want this! Would you please tell me how to figure the problem out and solve it. I can actually remove the corresponding component (Virtuemart) that are in charge of generating the .PDF files. Now I am trying to redirect all the .PDF pages ranking in Google to a 404 page and remove the functionality, I plan to regenerate a sitemap of my site and submit it to Google, will it be working for me? I really appreciate that if you could help solve this problem. Thanks very much. Sincerely SEOmoz Pro Member

Technical SEO | | fugu0